Rootmanual:Proxmox: Difference between page revisions

Revisions compared: Tobbez | Hugo

| Rad 42: | Rad 42: | ||

== Storage == |

== Storage == |

||

=== Lägg till ny ISO att boota från === |

=== Lägg till ny ISO att boota från === |

||

Babelfish exoprterar iso-storage vis autofs till /mp/iso på alla lysatormaskiner som stödjer det. Mappen ska vara skrivbar för alla rötter, och läsbar av samtliga. |

|||

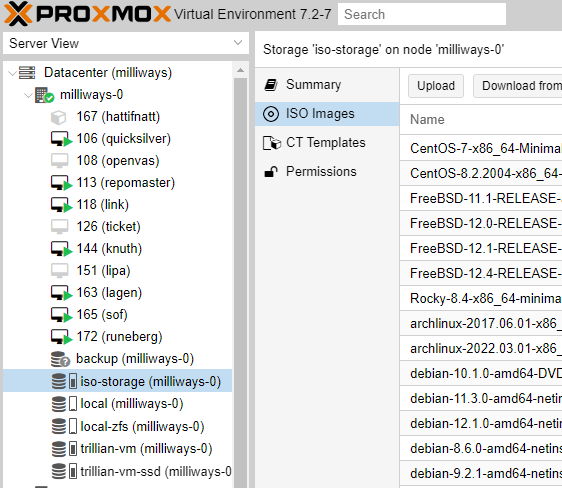

[[Fil:8C6MwuX.png]] |

|||

=== Configuring iSCSI on a storage server === |

=== Configuring iSCSI on a storage server === |

||

Current revision as of 1 October 2023, 13:08

Instructions for managing and using our setup of Proxmox.

NOTE: If your cursor gets stuck in SPICE, use 'ctrl + alt + r' to release the cursor.

Creating a virtual machine

- Connect to the management interface of one of the compute nodes (e.g. https://proxar.lysator.liu.se:8006/ or https://proxer.lysator.liu.se:8006/) and log in.

- Click Create VM in the top right corner.

- General tab:

  - Set a name for the VM

  - Choose compute node for the VM to run on

  - Add it to the appropriate resource pool

- OS tab: Pick Linux 3.X/2.6 Kernel (l26)

- CD/DVD tab: pick an ISO to install from.

- Hard disk tab:

  - Change Bus/Device to VIRTIO

  - Decide on a disk size. The disk image will use this much space on the storage server. If you are unsure, pick a smaller size and increase it later (10 GB should be plenty for the typical VM).

- CPU tab: Allocate CPUs/cores as appropriate.

- Memory tab: allocate memory as appropriate.

- Network tab:

  - Change Model to VirtIO (paravirtualized)

  - If the VM will need a public IP

    - Choose bridged mode

    - Set Bridge to vmbr0

  - If the VM will not need a public IP

    - Choose NAT

- Confirm tab: Click Finish.

- Select the new VM in the tree view.

- Go to the Hardware tab

  - Edit Display, and set Graphic card to SPICE.

- Go to the Options tab

  - Edit "Start at boot" and enable it if the machine should start automatically.

- Start the machine, and perform installation as usual.
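The GUI choices above can also be expressed as a single `qm create` call on the compute node. The following is only a sketch: the VM ID, VM name, storage ID, and ISO volume passed in at the bottom are placeholders invented for illustration, and the function prints the command for review rather than running it.

```shell
#!/bin/sh
# Print (do not run) a qm command mirroring the GUI choices above.
# All argument values used below are placeholders, not real resources.
qm_create_cmd() {
    vmid=$1 name=$2 storage=$3 size_gb=$4 iso=$5
    printf 'qm create %s --name %s --ostype l26' "$vmid" "$name"
    printf ' --virtio0 %s:%s' "$storage" "$size_gb"   # VIRTIO disk, size in GB
    printf ' --net0 virtio,bridge=vmbr0'              # bridged network on vmbr0
    printf ' --cdrom %s' "$iso"                       # ISO to install from
    printf ' --vga qxl --onboot 1\n'                  # SPICE display, start at boot
}

qm_create_cmd 120 example-vm proxstore-vm-storage-lvm 10 proxstore-iso-storage-nfs:iso/example.iso
```

CPU, memory, and resource pool settings are left out here and would need the corresponding options added before the command is used for real.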

Using the console

There are two options: SPICE, and Java. Don't use Java (unless you can't help it).

A prerequisite for using SPICE is the remote-viewer program (part of virt-viewer).

To connect to a VM, first start the VM, and then click SPICE in the top right corner in the Proxmox management interface. This downloads a file that you pass on the command line to remote-viewer.
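Passing the downloaded file to remote-viewer might look like the sketch below. The function name `open_spice`, the `VIEWER` override, and the example file name are assumptions made for illustration; only `remote-viewer` itself comes from the text above.

```shell
#!/bin/sh
# Open a SPICE connection file downloaded from the Proxmox interface.
# VIEWER can be overridden; it defaults to remote-viewer.
open_spice() {
    vv_file=$1
    viewer=${VIEWER:-remote-viewer}
    if ! command -v "$viewer" >/dev/null 2>&1; then
        echo "$viewer not found in PATH" >&2
        return 127
    fi
    "$viewer" "$vv_file"
}

# Example (the file name is a placeholder):
# open_spice ~/Downloads/pve-spice.vv
```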

Storage

Adding a new ISO to boot from

Configuring iSCSI on a storage server

This section assumes Debian.

- Follow the steps on http://zfsonlinux.org/debian.html

- Create a zfs pool:

zpool create ${POOLNAME} raidz2 /dev/sd{b..d}

- Create a block device in the zfs pool:

zfs create -V $SIZE ${POOLNAME}/vm-storage

- Install tgt:

aptitude install tgt

- If your tgt version is older than 1:1.0.17-1.1, you need to manually install the init script:

mkdir tmp; cd tmp;
wget http://ftp.se.debian.org/debian/pool/main/t/tgt/tgt_1.0.17-1.1_amd64.deb
ar -x tgt_1.0.17-1.1_amd64.deb
tar xf data.tar.gz
mv ./etc/init.d/tgt /etc/init.d/
cd ..; rm -rf tmp

- Setup tgt to start automatically:

update-rc.d tgt defaults

- Create an iSCSI target:

tgtadm --lld iscsi --mode target --op new --tid 1 --targetname iqn.${YYYY}-${MM}.se.liu.lysator:${HOSTNAME}.vm-storage

- Add a LUN to the target, backed by the previously created block device:

tgtadm --lld iscsi --mode logicalunit --op new --tid 1 --lun 1 -b /dev/zvol/${POOLNAME}/vm-storage

- Make the target available on the network:

tgtadm --lld iscsi --mode target --op bind --tid 1 -I 10.44.1.0/24

- Save the configuration:

tgt-admin --dump > /etc/tgt/targets.conf
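The target name used in the steps above follows a fixed pattern, so it can be generated instead of typed by hand. A small sketch, where the helper name `iqn_name` is made up for illustration:

```shell
#!/bin/sh
# Build the target name in the format used above:
# iqn.YYYY-MM.se.liu.lysator:HOSTNAME.vm-storage
iqn_name() {
    year=$1 month=$2 host=$3
    printf 'iqn.%s-%s.se.liu.lysator:%s.vm-storage\n' "$year" "$month" "$host"
}

# Name for a target created today on this machine.
iqn_name "$(date +%Y)" "$(date +%m)" "$(uname -n)"

# After the tgtadm steps, the live configuration can be inspected with:
#   tgtadm --lld iscsi --mode target --op show
```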

Attaching new VM storage to the Proxmox cluster

- Go to the Proxmox management interface.

- Click Datacenter in the tree view.

- Select the Storage tab.

- Click Add -> iSCSI

- Set the ID to ${STORAGE_SERVER_HOSTNAME}-vm-storage-iscsi (e.g. proxstore-vm-storage-iscsi).

- Enter portal (the IP of the storage server)

- Select the desired iSCSI target from the target dropdown.

- Uncheck the checkbox for Use LUNs directly.

- Click Add.

- Next, click Add -> LVM

- Set the ID to ${STORAGE_SERVER_HOSTNAME}-vm-storage-lvm (e.g. proxstore-vm-storage-lvm).

- For Base storage, choose the iSCSI volume you added previously.

- Check the checkbox for Shared.

- Click Add.
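The same two storage entries can probably be added from a shell with `pvesm`. This is a sketch under assumptions, not a tested procedure for this cluster: the portal address, volume group name, and base volume name below are placeholders, and the mapping of GUI checkboxes to options is noted in the comments. The function only prints the commands.

```shell
#!/bin/sh
# Print (do not run) pvesm commands corresponding to the GUI steps above.
# host, portal, target, vgname, and base_volume are placeholders.
pvesm_vm_storage_cmds() {
    host=$1 portal=$2 target=$3 vgname=$4 base_volume=$5
    # --content none corresponds to leaving "Use LUNs directly" unchecked.
    echo "pvesm add iscsi ${host}-vm-storage-iscsi --portal ${portal} --target ${target} --content none"
    # --shared 1 corresponds to the Shared checkbox.
    echo "pvesm add lvm ${host}-vm-storage-lvm --vgname ${vgname} --base ${host}-vm-storage-iscsi:${base_volume} --shared 1"
}

pvesm_vm_storage_cmds proxstore 10.44.1.10 \
    iqn.2023-10.se.liu.lysator:proxstore.vm-storage example-vg EXAMPLE_LUN_VOLUME
```

The real base volume name would have to be looked up first, for example with `pvesm list` on the iSCSI storage once it has been added.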

Attaching new ISO storage

- Go to the Proxmox management interface.

- Click Datacenter in the tree view.

- Select the Storage tab.

- Click Add -> NFS.

- Set the ID to ${STORAGE_SERVER_HOSTNAME}-iso-storage-nfs (e.g. proxstore-iso-storage-nfs).

- Enter the IP of the storage server.

- Select the export from the Export dropdown.

- In the Content dropdown, select ISO and deselect Images.

- Set Max Backups to 0.
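A command-line counterpart to the NFS steps above could look like the following sketch. The server address and export path are placeholders, and the backup limit from the last step is not covered. The function prints the command instead of executing it.

```shell
#!/bin/sh
# Print (do not run) a pvesm command that adds an ISO-only NFS storage.
# host, server_ip, and export_path are placeholders.
pvesm_iso_storage_cmd() {
    host=$1 server_ip=$2 export_path=$3
    # --content iso matches selecting ISO and deselecting Images.
    echo "pvesm add nfs ${host}-iso-storage-nfs --server ${server_ip} --export ${export_path} --content iso"
}

pvesm_iso_storage_cmd proxstore 10.44.1.10 /export/iso

# The exports offered by a server can be listed with: showmount -e SERVER_IP
```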

Moving a disk image to a new storage solution

If you want to move a disk from the internal ZFS file system (local-zfs) to one of the Ceph pools (trillian-vm or trillian-vm-ssd), this can be done through the web interface. If the disk image to be moved is already on one of the Ceph pools, the process is more involved. An example of a move from trillian-vm to trillian-vm-ssd (how rag was moved):

- Stop the VM whose disk is to be moved.

- Make sure there are no snapshots.

- SSH into the server that the VM has been running on.

- Run: /usr/bin/qemu-img create -f raw 'rbd:vm_ssd/vm-114-disk-1:mon_host=10.44.1.98;10.44.1.99;10.44.1.100:auth_supported=cephx:id=caspian:keyring=/etc/pve/priv/ceph/trillian-vm-ssd.keyring' 15G

- Check that the new disk image exists with: rbd list vm_ssd -c /etc/ceph/ceph.conf -k /etc/pve/priv/ceph/trillian-vm-ssd.keyring --id caspian

- Move the disk with: /usr/bin/qemu-img convert -p -n -f raw -O raw -t writeback 'rbd:vm/vm-114-disk-1:mon_host=10.44.1.98;10.44.1.99;10.44.1.100:auth_supported=cephx:id=caspian:keyring=/etc/pve/priv/ceph/trillian-vm.keyring:conf=/etc/ceph/ceph.conf' 'zeroinit:rbd:vm_ssd/vm-114-disk-1:mon_host=10.44.1.98;10.44.1.99;10.44.1.100:auth_supported=cephx:id=caspian:keyring=/etc/pve/priv/ceph/trillian-vm-ssd.keyring:conf=/etc/ceph/ceph.conf'

- Check the disk image with: rbd info vm_ssd/vm-114-disk-1 -c /etc/ceph/ceph.conf -k /etc/pve/priv/ceph/trillian-vm-ssd.keyring --id caspian

- The VM's configuration file must be edited so that it uses the new disk image: nano /etc/pve/qemu-server/101.conf

- Now you can start the VM again.

- Remove the old disk image once you feel confident.
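The long `rbd:` arguments in the qemu-img commands above are easy to mistype, so one option is to build them from their parts. A sketch, assuming the monitor addresses, user id, and keyring paths shown in the example; the helper name `rbd_uri` is made up for illustration, and the script prints the convert command for review instead of running it.

```shell
#!/bin/sh
# Build the rbd: URI used by qemu-img, with the monitor addresses,
# user id, and keyring locations taken from the example above.
MON_HOST='10.44.1.98;10.44.1.99;10.44.1.100'

rbd_uri() {
    pool=$1 image=$2 storage=$3
    printf 'rbd:%s/%s:mon_host=%s:auth_supported=cephx:id=caspian:keyring=/etc/pve/priv/ceph/%s.keyring:conf=/etc/ceph/ceph.conf\n' \
        "$pool" "$image" "$MON_HOST" "$storage"
}

src=$(rbd_uri vm vm-114-disk-1 trillian-vm)
dst=$(rbd_uri vm_ssd vm-114-disk-1 trillian-vm-ssd)

# Print the convert command for review instead of running it.
printf "qemu-img convert -p -n -f raw -O raw -t writeback '%s' 'zeroinit:%s'\n" "$src" "$dst"
```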